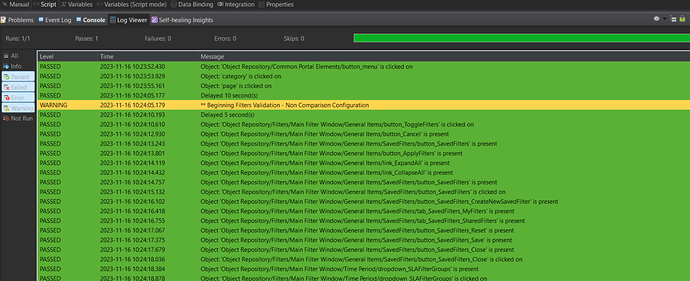

I’m trying to run a set of actions through a Katalon suite and every single step in the included scripts is taking exactly 30 seconds to run once the script hits a certain point, across all scripts, as seen here:

Additionally the Suite Results are showing a 30 second object timeout value from somewhere, even though the “default PageLoadTimeout” value is set to FALSE:

This makes zero sense, as the maximum object timeout as specified programmatically in the script is 20 seconds, as seen in the data mapping:

I have checked my Project Settings, and the default element timeout there is set to 10 seconds:

There is nothing in the suite logic that is non-default, so the timeout isn’t getting set here:

Additionally when I run the scripts included in the suite on their own they run perfectly fine, with no 30 second delay between steps:

None of my other suites are experiencing this issue, despite all suites being setup the same and using the same data files; I’ve even tried creating a brand new suite with these scripts and the same thing happens.

I can’t for the life of me figure out where this 30 second timeout override is coming from, nor how to get around it for this suite - any ideas are most welcome!

1 Like

Very strange.

This is likely not the solution but… if you have the log open in tree mode, close it (doesn’t look like you are looking at the screenshots). But also, stop looking at passes, they’re just noise.

My test cases certainly wait longer when running in suites but that’s because they know they’re running in a suite and I told them to wait longer. Do you have code like that? Maybe someone else added it and made a bad job of it?

And what version of Katalon are you using? OS?

Sorry, I skimmed past this on first reading (my bad).

So, one of your tests is causing this… and I think you know what you need to do, right?

- make a cope of the suite.

- remove tests one by one.

- rerun the suite

Alternative: Add tests one by one from the bad suite to a working suite.

This happens as soon as I add any of the tests in question to any suite, OS is Windows 11 and Katalon Version is 8.6.6

I’ve listed above all the code items that could be involved in setting this delay - i.e. - I can’t find any.

The suites and code are all mine, so no risk of someone inserting anything anywhere that I don’t know about

1 Like

Can you post one of the TCs in its entirety?

Will do, here’s one more oddity, I’m on Windows 11, yet Katalon shows me as being on Windows 10?

annnd the promised Test Case:

import static com.kms.katalon.core.testobject.ObjectRepository.findTestObject

import java.lang.CharSequence

import org.openqa.selenium.JavascriptExecutor

import com.kms.katalon.core.model.FailureHandling

import com.kms.katalon.core.testobject.TestObject

import com.kms.katalon.core.util.KeywordUtil

import com.kms.katalon.core.webui.keyword.WebUiBuiltInKeywords as WebUI

import groovy.time.TimeCategory

import org.openqa.selenium.By as By

import org.openqa.selenium.WebDriver as WebDriver

import org.openqa.selenium.WebElement as WebElement

import com.kms.katalon.core.webui.driver.DriverFactory as DriverFactory

import com.kms.katalon.core.webui.keyword.WebUiBuiltInKeywords as WebUI

import com.kms.katalon.core.testobject.ConditionType

import org.apache.commons.lang3.StringUtils as StringUtils

import btt_portal.*

//***************************************************************************

// Script Name: Page Validation Test - Page Validation Test - Tag & Content Governance - Overview - Filters

//

// Script Overview: Performs steps needed to validate that the RUM Real User Aggregate Waterfall page opens correctly and is fully populated

//

// Change History:

// Date User Change Made

// 06/27/2023 Kevin Jackey Initial Script Creation

//***************************************************************************

//***************** Object Definitions - Multi-Use ********************

// Keyword association variables

KeywordUtil log = new KeywordUtil()

Common_Functions common_functions= new Common_Functions()

Widgets element_check = new Widgets()

Filter_Element_Validation validate_filters = new Filter_Element_Validation()

Filters filters_validate = new Filters()

Login userLogin = new Login()

// Object identification variables

String element_name

String current_page = 'Tag & Content Governance -> Overview -> Filters Check'

// Object Error / Status Tracking Variables

def error_list = ""

def failure_handling

// Page Time Spent Tracking Variables

def current_page_spent_time = 0

int converted_object_timeout = Integer.valueOf(object_timeout)

int current_object_timeout = converted_object_timeout

def filter_object_list

//************************* Mark Script Execution Start ***********

log.markWarning("**** Beginning Execution of script: " + current_page )

///************************* Perform User Login ************************

//Determine if login has already occurred by checking for presence of User menu button

// If not found then Login to BT Portal'

userLogin.login(user_name, System.getenv('BTT_Automation_Pass'))

///*********** Navigate to RUM Real User Aggregate Waterfall page ****************

//Navigate to the RUM Real User Aggregate Waterfall page

Navigation menu = new Navigation()

//Only select Site if variable is populated

if (site_name > '') {

menu.select_site(site_name)

}

///Open Page and set the start_time variable

menu.select_menu_page('tag-and-content-governance-li', null , 'Overview', current_page)

//Begin Page Load Tracking Timer

def startDate = new Date()

start_time = startDate.getTime()

//log.logInfo ('Start time : ' + start_time)

//Set timeout variable to mirror script parameter

current_object_timeout = converted_object_timeout

WebUI.delay(10)

///************************* Perform Filter Element Validation for Non-Comparison Configuration **********************************

log.markWarning ("** Beginning Filters Validation - Non Comparison Configuration")

//Filter Elements should appear within 10 seconds

def filter_object_timeout = 10

// Open Filters Section

filters_validate.open_filters_window(current_object_timeout)

//Validate General Buttons Section

def validation_objects_general = ['cancel_button', 'saved_filters_button', 'apply_filters_button', 'expand_all_link', 'collapse_all_link']

error_list = filters_validate.validate_filters_general_elements(current_page, validation_objects_general, filter_object_timeout, error_list)

//Reduce object_timeout by time spent so far

current_object_timeout = common_functions.reduce_object_timeout (start_time, converted_object_timeout)

//Validate Saved Filters Section

def validation_objects_saved_filters = ['savedfilters_button','createnew_button', 'my_filters_tab', 'shared_filters_tab', 'reset_button', 'save_button', 'close_button']

error_list = filters_validate.validate_filters_savedfilters_elements(current_page, validation_objects_saved_filters, filter_object_timeout, error_list)

//Reduce object_timeout by time spent so far

current_object_timeout = common_functions.reduce_object_timeout (start_time, converted_object_timeout)

//Validate Time Period Section

def validate_filters_timeperiod_elements = ['sla_filter_groups_dropdown']

error_list = filters_validate.validate_filters_timeperiod_elements(current_page, validate_filters_timeperiod_elements, filter_object_timeout, error_list)

// Close Filters Section

filters_validate.close_filters_window(current_object_timeout)

////**************************************** Generate Page Run Error If Needed ***********************************************************************

//Generate run error if global error result failed

if (error_list != "") {

log.markFailed ("Errors found in the following items during run execution of script " + current_page + ": " + error_list)

} else {

log.markPassed ('No errors found during run execution of script ' + current_page)

}

return error_list

return error_list

Are you using CallTestCase?

That was something we’ve been experimenting with, but are not using in this instance

Will try removing the return statement above as well as trying only using a script in the middle of the test set batch and see if that helps.

Still not understanding where the 30 second timeout is coming from though

looks like some default value somewhere.

as you seems to already checked the testcase scripts (and hopefully the test suites) , next to check will be… mhm, by chance, are you using any test listener?

or a tear-up / tear-down script in your project?

No setup / teardown script, we are using a test case listener but it’s fairly straightforward and we aren’t seeing the behavior from any other tests that would all be using the same listener:

import com.kms.katalon.core.annotation.AfterTestCase

import com.kms.katalon.core.annotation.BeforeTestCase

import com.kms.katalon.core.configuration.RunConfiguration

import com.kms.katalon.core.context.TestCaseContext

import btt_portal.Common_Functions

class NewTestListener {

/**

* Executes before every test case starts.

* @param testCaseContext related information of the executed test case.

*/

@BeforeTestCase

def sampleBeforeTestCase(TestCaseContext testCaseContext) {

Common_Functions sites = new Common_Functions()

sites.create_global_variable('test_case', testCaseContext.getTestCaseId())

}

/**

* Executes after every test case ends.

* @param testCaseContext related information of the executed test case.

*/

@AfterTestCase

def sampleAfterTestCase(TestCaseContext testCaseContext) {

println testCaseContext.getTestCaseId()

println testCaseContext.getTestCaseStatus()

}

}

Any waits around calls run in the browser (js) like XHR?

While I’m waiting for your answer…

Let me see if I have this correct: if you take one of the TCs from the problem suite and place it in another suite that does NOT have problems, it too will suddenly start waiting 30 secs.

Correct?

No class like that in the sections that are hanging

Getting closer, it does appear to be limited to one or two of the scripts (there are 16 total in the suite), still working on isolating now; however the code provided earlier is on of the “trigger scripts” though I can’t for the life of me figure out why

Keep going. I’m now hooked waiting for the next “episode”

@kevin.jackey

Do you have “Smart Wait” enabled?

If yes, try disabling it.

1 Like

Let me show you a running code that demonstrates how “Smart Wait” could force your test case to take unexpectedly long time.

Here is a test case:

import org.apache.commons.lang3.time.DurationFormatUtils

import org.apache.commons.lang3.time.StopWatch

import com.kms.katalon.core.testobject.ConditionType

import com.kms.katalon.core.testobject.TestObject

import com.kms.katalon.core.webui.keyword.WebUiBuiltInKeywords as WebUI

TestObject makeTestObject(String xpathTemplate, Map<String, String> variables) {

def groovyscript = '"""' + xpathTemplate + '"""'

def shell = new GroovyShell(new Binding(variables))

String expr = shell.evaluate(groovyscript)

TestObject tObj = new TestObject(expr)

tObj.addProperty("xpath", ConditionType.EQUALS, expr)

return tObj

}

StopWatch sp = new StopWatch()

sp.start()

WebUI.openBrowser('https://www.katalon.com/')

WebUI.verifyElementPresent(makeTestObject('//a[contains(.,"Start free trial")]', [:]), 10)

WebUI.closeBrowser()

sp.stop()

println DurationFormatUtils.formatDurationHMS(sp.getTime())

If you run it with “Smart Wait” disabled, you will see

2023-11-17 09:11:00.659 INFO c.k.katalon.core.main.TestCaseExecutor - --------------------

2023-11-17 09:11:00.660 INFO c.k.katalon.core.main.TestCaseExecutor - START Test Cases/visitKatalonDotComWithSmartWait

2023-11-17 09:11:01.032 INFO

...

00:00:08.883

2023-11-17 09:11:09.807 INFO c.k.katalon.core.main.TestCaseExecutor - END Test Cases/visitKatalonDotComWithSmartWait

It took less than 9 seconds. It is normal.

Now you want to try enable Smart Wait and run the same test. Occationally you would see somthing like …

00:00:29.192

But sometimes you may see the test finishes just as nomal. Or, it may take over 1 minutes. The duration varies occationally. I guess the behavior of Smart Wait depends on how the target URL http://katalon.com/ behaves internally (if any AJAX events continue to flow, when it stops); and I have no idea about that URL.

I wondered if @kevin.jackey is aware of the Smart Wait. So I thought I should let him know.

If you want to know more about how Smart Wait is implemented, please refer to Stale element not found, is this relate to using same Object? - #95 by Brandon_Hein

1 Like