Hi,

I am trying to call `KeywordUtil.markWarning` from a custom keyword I created, and it does not appear to do anything. If I replace it with `markPassed`, `logInfo`, etc., it works as expected. Any idea what could be going on? I can use `markWarning` from a test script, just not from a keyword.

Thanks,

Matt

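```groovy
import org.skyscreamer.jsonassert.JSONAssert
import org.skyscreamer.jsonassert.JSONCompareMode
import com.kms.katalon.core.util.KeywordUtil

try {
    JSONAssert.assertEquals(response2.getResponseBodyContent(), response1.getResponseBodyContent(), JSONCompareMode.LENIENT)
} catch (AssertionError e) {
    KeywordUtil.markWarning("JSONAssert Mismatches: " + e.toString())
}
```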

I use `markWarning` all day, every day, thousands and thousands of times, from keywords too.

I actually use it as part of my reporting utility, which prints out the steps from my tests.

I think we need to see more of your code and any errors you are seeing.

Hi @Russ_Thomas,

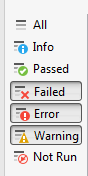

The screenshot above shows the code within the keyword. You can see in the Log Viewer that only the `logInfo` statement writes to the log. The two statements are identical apart from `markWarning` vs. `logInfo`. `markWarning` works when run from the test case.

Thanks,

Matt

I notice you’re using Tree View. Try turning it off (I’m wondering whether Katalon is reporting the warning somewhere else in the tree).

@Russ_Thomas,

OK, that worked and I can see the warning now. Thanks! This is the first time I have used warnings. I have hundreds of tests that use this keyword, and I would like to know when these warnings occur without having to dig through each set of results. Do you have any recommendations? I also execute through Jenkins, if there are any options there. I suppose I could log each occurrence to a text file?

Thanks,

Matt

Not recommendations, per se: my use of `markWarning` is wholly non-standard.

That’s precisely what I do (see my second screenshot above). The text file is then read back into a second HTML file and presented in the browser, with the text report inserted into an HTML `<textarea>`.
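The text-file-to-HTML step can be sketched in a few lines. This is not the tool described above (it isn't shown in the thread), just a minimal illustration in plain Java for the JVM Katalon runs on: read a text report, HTML-escape it, and wrap it in a read-only `<textarea>`. The class name `TextReportToHtml`, the file names, and the `rows`/`cols` sizes are hypothetical choices.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper: wraps a plain-text report in a minimal HTML page
// so it can be opened in a browser inside a read-only <textarea>.
final class TextReportToHtml {

    // Escape characters that could otherwise close the <textarea> early or be read as markup.
    static String escapeHtml(String s) {
        StringBuilder sb = new StringBuilder(s.length());
        for (char c : s.toCharArray()) {
            switch (c) {
                case '&': sb.append("&amp;"); break;
                case '<': sb.append("&lt;"); break;
                case '>': sb.append("&gt;"); break;
                case '"': sb.append("&quot;"); break;
                default: sb.append(c);
            }
        }
        return sb.toString();
    }

    // Build the whole page; the (escaped) report text goes inside the <textarea>.
    static String toHtml(String title, String reportText) {
        return "<!DOCTYPE html>\n<html><head><meta charset=\"utf-8\"><title>"
                + escapeHtml(title) + "</title></head><body>\n"
                + "<textarea readonly rows=\"40\" cols=\"120\">"
                + escapeHtml(reportText)
                + "</textarea>\n</body></html>\n";
    }

    // Read the text report from disk and write the HTML version.
    static void convert(Path textReport, Path htmlOut) throws IOException {
        String text = new String(Files.readAllBytes(textReport), StandardCharsets.UTF_8);
        String html = toHtml(textReport.getFileName().toString(), text);
        Files.write(htmlOut, html.getBytes(StandardCharsets.UTF_8));
    }
}
```

For example, `TextReportToHtml.convert(Paths.get("warnings.txt"), Paths.get("warnings.html"))`, with both paths replaced by your own. A `<textarea>` keeps the report's spacing and line breaks intact, which matters if the report relies on indentation.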

But like I said, that’s a non-standard use of regular Katalon APIs. Warnings, as you can see, are benign. Being yellow, they stand out from the general noise of `logInfo` messages. I keep my Log Viewer like this:

I only depress “Info” when I’m investigating an issue more closely.

In answer to the question, “What if you get a real warning? Aren’t yours obscuring real warnings?”…

Apart from “This test passed”, all of mine are indented by half a dozen spaces. Regular warnings are not, so no, I don’t have that problem: regular warnings sit hard left on the line(s).
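The indentation convention is easy to centralize so every custom warning gets the same prefix. A minimal sketch in plain Java, assuming a six-space prefix; `FauxWarning` and its methods are hypothetical names, not Katalon APIs. In a keyword you would pass `FauxWarning.format(...)` to `KeywordUtil.markWarning`. A flush-left line such as “This test passed” would simply not go through `format`, so it would need its own check if you filter on the prefix.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of the "indent your own warnings" convention.
final class FauxWarning {

    // Assumption: six spaces, i.e. "a half-dozen".
    static final String INDENT = "      ";

    // Prefix a report line before handing it to markWarning.
    static String format(String message) {
        return INDENT + message;
    }

    // A message that starts with the indent is one of ours; anything flush left is a real warning.
    static boolean isFaux(String warningMessage) {
        return warningMessage.startsWith(INDENT);
    }

    // Filter a list of warning messages down to the real (flush-left) ones.
    static List<String> realWarnings(List<String> messages) {
        List<String> real = new ArrayList<>();
        for (String m : messages) {
            if (!isFaux(m)) {
                real.add(m);
            }
        }
        return real;
    }
}
```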

That’s precisely why I work this way. I’m in control, and I want to get to the fails first and quickly. For working tests, all the rest of the info is just noise.

I can’t hand-on-heart recommend you work the way I do. I’m a relatively competent programmer and generally able to fix issues unaided. The last thing I would want is for someone else to try to copy my strategies and expect similar outcomes. That said…

If you’re comfortable writing warnings/messages/reports to a text file, go for it. I, along with many other knowledgeable programmers, am usually around if you get stuck.

@Russ_Thomas,

Thanks for your detailed response. A little background info:

So I am comparing two JSON responses. They can differ to some degree due to caching and environment differences. I am using JSONAssert customizations to account for these by ignoring attributes, allowing a range of values, etc. This is working fine, but my team lead would like me to flag the mismatches so they can review them as needed. So I run the comparison once with the customizations and then again without them, using error handling to capture the failures. I can easily run a few hundred of these at a time, so being able to see where the warnings are would be very helpful. I guess I will work on creating a text log of warnings for each test suite.

```groovy
import org.skyscreamer.jsonassert.Customization
import org.skyscreamer.jsonassert.JSONAssert
import org.skyscreamer.jsonassert.JSONCompareMode
import org.skyscreamer.jsonassert.comparator.CustomComparator
import com.kms.katalon.core.util.KeywordUtil
import internal.GlobalVariable

// Compare with per-API customizations
switch (apiName) {
    case "aAPI":
        // Ignore every TimeStamp value, wherever it appears
        JSONAssert.assertEquals(response2.getResponseBodyContent(), response1.getResponseBodyContent(),
            new CustomComparator(JSONCompareMode.LENIENT,
                new Customization("**.TimeStamp", { a, b -> true })))
        break

    case "bAPI":
        // Strict comparison, but ignore both base-link fields
        JSONAssert.assertEquals(response2.getResponseBodyContent(), response1.getResponseBodyContent(),
            new CustomComparator(JSONCompareMode.STRICT,
                new Customization("**.aBaseLink", { a, b -> true }),
                new Customization("**.bBaseLink", { a, b -> true })))
        break

    case "cAPI":
        // Strict comparison, but only the characters at indices 1-7 of LocalObservationDateTime must match
        JSONAssert.assertEquals(response2.getResponseBodyContent(), response1.getResponseBodyContent(),
            new CustomComparator(JSONCompareMode.STRICT,
                new Customization("**.LocalObservationDateTime", { a, b -> a.substring(1, 8) == b.substring(1, 8) })))
        break

    default:
        JSONAssert.assertEquals(response2.getResponseBodyContent(), response1.getResponseBodyContent(), JSONCompareMode.LENIENT)
        break
}

// Run with no customization and report mismatches as warnings
try {
    JSONAssert.assertEquals(response2.getResponseBodyContent(), response1.getResponseBodyContent(), JSONCompareMode.LENIENT)
} catch (AssertionError e) {
    GlobalVariable.json_assert_mismatches = e.toString()
    KeywordUtil.markWarning("JSONAssert Mismatches Warning: " + e.toString())
    // add code to write to log file
}
```
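For the “add code to write to log file” placeholder in the catch block, here is a minimal sketch written in plain Java (Groovy accepts most Java syntax, so it should adapt to a keyword with little change). `MismatchLog`, its methods, the tab-separated line format, and the log path are all hypothetical choices, not part of Katalon or JSONAssert. It flattens each multi-line JSONAssert message onto one line so that one mismatch is one line in the file.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.Collections;

// Hypothetical helper: appends one line per JSON mismatch to a text log.
final class MismatchLog {

    private static final DateTimeFormatter TS = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    // Tab-separated: timestamp, test id, API name, flattened message.
    static String formatEntry(LocalDateTime when, String testId, String apiName, String message) {
        // JSONAssert messages span several lines; join them so one mismatch = one line.
        String flat = message.replace("\r", "").replace("\n", " | ");
        return TS.format(when) + "\t" + testId + "\t" + apiName + "\t" + flat;
    }

    // Append one entry, creating the file (and its parent folders) on first use.
    // synchronized only serializes writers inside a single JVM.
    static synchronized void append(Path logFile, String entry) throws IOException {
        Path parent = logFile.getParent();
        if (parent != null) {
            Files.createDirectories(parent);
        }
        Files.write(logFile, Collections.singletonList(entry), StandardCharsets.UTF_8,
                StandardOpenOption.CREATE, StandardOpenOption.APPEND);
    }
}
```

In the `catch` block you would then call something like `MismatchLog.append(logPath, MismatchLog.formatEntry(LocalDateTime.now(), testId, apiName, e.toString()))`, where `logPath` (for example, one file per suite run) and `testId` are values you supply.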

Thanks,

Matt

I’m not familiar with those APIs, but it all looks sensible from here.

I hope I haven’t led you astray, though: watching my “yellow block” go by is only something I do while developing or running individual tests.

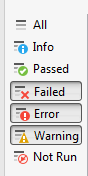

Normally, I’m running suites (usually overnight), in which case I’m looking for Failed/Error results (I release the Warning button so all that stuff doesn’t appear). Once a suite completes, I look for fails/errors (red). In addition, another tool (an adapted test case) analyzes the report file, picks out the fails only, and pretty-prints those separately:

As you can see, it shows fails/errors only (all else is noise). We run a tight ship; time spent staring at pass rates is time wasted for us. The “Error at or near NNN” lines refer to the step numbers you saw in my yellow faux-warnings block, which brings us full circle.
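A fails-only digest can be sketched along the same lines. The thread doesn't say which report format that adapted test case reads, so this minimal Java sketch assumes a JUnit-style XML report (Katalon can produce one for suite runs; check your own report folder for its name and location) and lists only `<testcase>` entries that contain `<failure>` or `<error>` elements. `FailureDigest` is a hypothetical name, and the sketch does not reproduce the “Error at or near NNN” step mapping.

```java
import java.io.ByteArrayInputStream;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical sketch: pull failures/errors out of a JUnit-style XML report.
final class FailureDigest {

    // One line per <failure> or <error> element found under a <testcase>.
    static List<String> failures(InputStream junitXml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(junitXml);
        NodeList cases = doc.getElementsByTagName("testcase");
        List<String> out = new ArrayList<>();
        for (int i = 0; i < cases.getLength(); i++) {
            Element testCase = (Element) cases.item(i);
            for (String kind : new String[] {"failure", "error"}) {
                NodeList hits = testCase.getElementsByTagName(kind);
                for (int j = 0; j < hits.getLength(); j++) {
                    Element hit = (Element) hits.item(j);
                    // Prefer the message attribute; fall back to the element text (often a stack trace).
                    String msg = hit.getAttribute("message");
                    if (msg.isEmpty()) {
                        msg = hit.getTextContent().trim();
                    }
                    out.add(kind.toUpperCase(Locale.ROOT) + "  " + testCase.getAttribute("name") + ": " + msg);
                }
            }
        }
        return out;
    }

    // Convenience overload for XML already held in a String.
    static List<String> failures(String xml) throws Exception {
        return failures(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
    }
}
```

For example, `FailureDigest.failures(new FileInputStream(reportPath)).forEach(System.out::println)`, with `reportPath` pointing at your own report file. Passing test cases never appear in the output, which matches the "all else is noise" approach.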